About a month ago, I published a post about privilege escalation. Well, I should say it wasn't the right approach. Or, better put, it is only one option. After several discussions with Unix and Ansible gurus, I have chosen a better approach: public key authentication.

The new inventory looks nothing like the good old INI file format, but it is well structured and, like most YAML documents, self-explanatory.

Here are a few points why I've changed my mind.

- The first one is purely logical. When you switch your session to another user, you don't elevate your access; you just act as the other user, with about the same privileges in the system.

- You don't need to seed additional scripts across the inventory. You still need to push your public key, but that is an entirely different case.

- An additional script is a potential troublemaker, even if it has fewer lines than you have fingers on your hands.

- You don't need to alter the Ansible configuration, and there are no more become* variables unless you really need to become root.

Environment description and prerequisites

Before I dive into my inventory entries and playbook tasks, let's recap why I'm doing this and how we will use SSH public key authentication.

As I explained in the previous post, quite often you have neither a password nor direct command execution privileges for the application owner account. In most of my cases, I don't have a password for the user oracle, nor can I execute commands similar to su oracle -c "echo $(id)".

The only command available is: sudo su - $target_user

Even with all those restrictions, I can configure a password-less connection as the target user if my public SSH key is in that user's list of authorized keys. You are welcome to google all the configuration steps, or just read this article, to see how to create your keys. Double-check that you:

- Are connected to the Ansible controller with your own account

- Have a ~/.ssh folder with mode 700 (only the owner has read, write, and execute privileges)

- Have the id_rsa and id_rsa.pub files in that folder.
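The checklist above can be scripted. This is a minimal sketch, not part of the original setup: check_ssh_dir is a hypothetical helper name, and it assumes the default RSA key file names mentioned in the list.

```shell
# check_ssh_dir is a hypothetical helper: it verifies that a directory
# (default: ~/.ssh) exists, has mode 700, and holds an RSA key pair.
check_ssh_dir() {
    dir="${1:-$HOME/.ssh}"
    [ -d "$dir" ] || { echo "missing directory: $dir"; return 1; }
    # find prints the directory only when its permission bits are exactly 700
    [ -n "$(find "$dir" -prune -perm 700)" ] || {
        echo "wrong mode on $dir (expected 700)"; return 1;
    }
    for f in id_rsa id_rsa.pub; do
        [ -f "$dir/$f" ] || { echo "missing file: $dir/$f"; return 1; }
    done
    echo "ok"
}
```

Run check_ssh_dir without arguments on the controller to test your own ~/.ssh. If the key files are missing, ssh-keygen -t rsa creates them under the default names.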

Inventory redesign

I'm not going to use all those become_* instructions and variables, so it is better to start with a new inventory. Let's make the syntax consistent across the environment and rewrite the demo inventory in YAML format. After a complete redesign, my new all_hosts.yml looks like this:

    all:
      hosts:
        localhost:
      children:
        ora_group:
          hosts:
            fed28.vb.mmikhail.com:
          vars:
            ansible_user: oracle
        jbs_group:
          hosts:
            fed28:
          vars:
            ansible_user: jboss

- The top-level entry all contains all the hosts and groups of hosts, as well as the vars: (omitted in my case) and children: elements.

- All host groups are listed under the children: element. My example has one ungrouped host, localhost (under all/hosts), and two groups in the all/children branch.

- You can define global, group-level, or host-level variables using standard YAML notation.

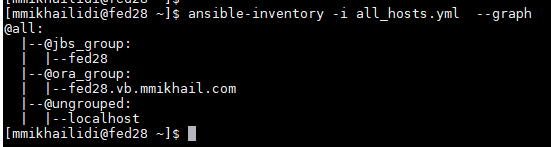

You can check the inventory syntax with the ansible-inventory command. I prefer to build a tree; it gives you a compact ASCII picture of your inventory.
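A minimal sketch of that check, assuming the inventory is saved as all_hosts.yml in the current directory. The guard is only there so the snippet does nothing harmful on a machine without Ansible.

```shell
# Assumption: the inventory from this post sits in the current directory.
inventory=all_hosts.yml

if command -v ansible-inventory >/dev/null 2>&1 && [ -f "$inventory" ]; then
    # --graph prints the group/host tree; --list would dump it as JSON instead
    ansible-inventory -i "$inventory" --graph
else
    echo "ansible-inventory or $inventory not found; nothing to check"
fi
```

A syntax error in the YAML shows up here as a parsing error or warning rather than a tree, so this is a cheap test to run before any playbook.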

In the next section, we add our public key to the authorized keys lists and retest user access.

Automate key delivery

If you have a handful of controlled hosts, you could deliver your public key manually, but what's the point? It's all about automation and minimizing human error, so let's script it and reuse it someday. The planned playbook should perform the following tasks:

- Check whether your public key is missing from the target user's ~/.ssh/authorized_keys file.

- If it is missing, copy it to the target host and add it to the list of authorized keys.

- Remove temporary files at the end.

Take a look at the playbook below. It implements all the steps from our list. Simple, isn't it?

    ---
    ## Playbook helps you to configure key-based SSH authentication
    ## when you don't have
    - name: Copy user public key to targets
      hosts: all
      vars:
        # Path for a temporary copy of the key; no task below uses it
        key_file: "/tmp/{{ ansible_user_id }}.pub"
        # Content of the controller-side public key, read by the lookup plugin
        key: "{{ lookup('file', '~/.ssh/id_rsa.pub') }}"
        target_user: oracle
      tasks:
        - name: check if key exists
          shell:
            cmd: |
              sudo su - {{ target_user }} <<EOF
              grep -F "{{ key }}" ~/.ssh/authorized_keys | wc -l
              EOF
            executable: /bin/sh
          register: keys_found

        - block:
            - name: Update key authorization
              shell:
                cmd: |
                  sudo su - {{ target_user }} <<END
                  [ ! -e ~/.ssh ] && { mkdir ~/.ssh; chmod 700 ~/.ssh; }
                  echo "{{ key }}" >> ~/.ssh/authorized_keys
                  chmod 644 ~/.ssh/authorized_keys
                  END
                executable: /bin/sh
          # Run the block only when the key was not found
          when: keys_found.stdout == "0"
    ...

I have found a few tweaks that could be very useful and that you won't see in "Ansible 101" courses and videos:

- The playbook targets all hosts; I will limit the scope to a specific group with command-line arguments.

- The variable target_user specifies the user the public key is registered for. At run time, we will override it to register the key with jboss as well.

- The playbook variable key is populated from the local key file with the lookup plugin. This avoids additional file manipulation on the controlled host and lets you use the value directly to check whether the key already exists.

- The script reuses the findings from the previous post, although this time they are incorporated into the playbook. The shell module is used in a somewhat twisted way, but it strips all the leading spaces and executes the multi-line content precisely as I want it. If you put the same multi-line content as shell: |, it will preserve all the leading spaces and execution will hang.
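One detail of the heredoc trick is worth keeping in mind. The tasks use an unquoted delimiter (<<EOF), so the calling shell expands any $variable or $(command) in the body before the target user's shell ever sees it. The sketch below illustrates the difference. Plain sh stands in for sudo su - $target_user, and the variable name who is made up for the demonstration.

```shell
who=controller_side   # variable in the calling shell

# Unquoted delimiter: the calling shell expands $who before the child runs.
unquoted=$(sh <<EOF
who=target_side
echo "$who"
EOF
)

# Quoted delimiter: the body is passed literally, so the child expands $who.
quoted=$(sh <<'EOF'
who=target_side
echo "$who"
EOF
)

echo "unquoted: $unquoted"   # prints "unquoted: controller_side"
echo "quoted:   $quoted"     # prints "quoted:   target_side"
```

The playbook is not affected, because Ansible substitutes {{ key }} and {{ target_user }} before the shell runs, and ~ is not expanded inside a heredoc. If you add shell variables to the heredoc body, quote the delimiter or escape the dollar signs.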

Let's install the key for the oracle servers:

Two arguments allow us to successfully execute the playbook:

- Option -l restricts the playbook's hosts: directive to the hosts in the given group.

- Option -e overrides the ansible_user variable defined in the inventory.

    $ ansible-playbook -l jbs_group -e "ansible_user=mmikhailidi target_user=jboss" \
        ansible/books/post-pub-keys.yml

You can rerun the script multiple times; it will alter the controlled hosts only if your key isn't already in the authorized_keys list.
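The check-then-append logic that makes reruns safe can be tried locally, without Ansible or sudo. This is a minimal sketch under that assumption: add_key_once is a hypothetical helper name, and both file paths are passed in by the caller.

```shell
# add_key_once is a hypothetical helper: append a public key to an
# authorized_keys file only when that exact line is not already there.
add_key_once() {
    key_file=$1    # path to the public key, e.g. ~/.ssh/id_rsa.pub
    auth_file=$2   # path to the authorized_keys file to update
    key=$(cat "$key_file") || return 1

    # -F fixed string, -x whole line, -q quiet; a missing file counts as "not found"
    if grep -qxF -- "$key" "$auth_file" 2>/dev/null; then
        echo "unchanged"
    else
        printf '%s\n' "$key" >> "$auth_file"
        echo "added"
    fi
}
```

Calling it twice with the same arguments reports "added" the first time and "unchanged" the second, which is the behavior the playbook aims for on the controlled hosts.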

Results and conclusions

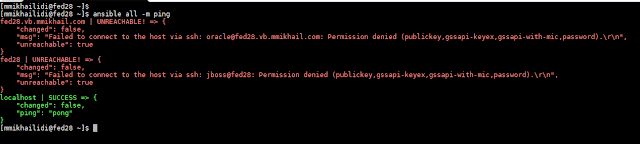

Now, all hosts in the inventory are ready to receive commands from the Ansible controller. To check connectivity, let's collect user information from the servers.

The command executes successfully across the inventory, using the predefined accounts. The only issue I can see is that you need to explicitly override the username if you need to run something under your own name. In most environments I have worked with, that's not an issue at all.
